We continue to hear about patient satisfaction. The falsified wait times that brought the VA into disrepute serve as good an example as any. Quality measures may be valid under study conditions, but if used improperly or applied in a dysfunctional environment, they help no one.

Yet we bow to their power, and the data sometimes compel us to work the score, not the patient. This is not news to any of us who feel the impact of quality reporting, whether directly through how we are compensated or indirectly through the pressure hospital leadership brings to bear to raise the grades.

Despite reassurances about their validity from a number of folks who helped design the patient satisfaction surveys we use now, I remain unconvinced that they reflect our efforts as the doctors of record. To clarify, I speak of physician performance only as a sub-domain of the composite scores payers currently dissect.

Yet despite the bolstering from the survey developers, why do the surveys feel wide of the mark? The colleagues I speak with sense that the physician evaluations carry little meaning, place little faith in their veracity, and would not judge another physician by the results. Most of us dismiss the results because a patient sees innumerable faces during a stay, often has difficulty identifying the primary caregiver in the hospital (even when given pictures), may have one bad encounter with doctor #7 (of 16) that blemishes an otherwise purposeful stay, and completes the survey weeks after discharge.

Ask any inpatient provider; they will tell you. They will not push back because they feel disgruntled or don't wish to be measured. Far from it. They will push back because they see the surveys perform below par in the trenches.

Well, it took long enough, but I want to direct you to a study just released from the hospital medicine (HM) group at Johns Hopkins: "Development and validation of the tool to assess inpatient satisfaction with care from hospitalists." The study deserves a look from all of us, if not for a trial at your facility, then to envision what might better serve our needs as value-based purchasing (VBP) expands.

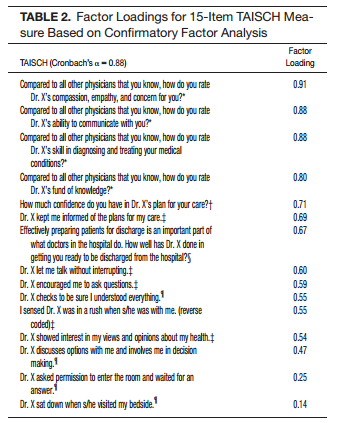

We have clamored for an instrument that does what the title promises, and while HCAHPS and Press Ganey (PG) serve a useful role, they don't deliver results that enable better practice at the individual doctor level. The Tool to Assess Inpatient Satisfaction with Care from Hospitalists (TAISCH) went through several stages of development. In testing, the survey covered 203 patients and 29 hospitalists (with a minimum of two consecutive days of care). The survey comprised 15 items, underwent validation, and had a Cronbach's alpha of 0.88.

If you review the questions above, you will note they don't resemble what we have become accustomed to with current scales. They resonate with bedside practice and serve a more useful purpose in assessing doctors.

Uncertainties remain, as in all studies: real-time completion and auditing, application to patients of different cultures and abilities, and wider-scale validation. But those hurdles would apply to any "survey 2.0" investigation, and they are more a feature than a bug. Incidentally, the TAISCH did NOT correlate with PG scores. Hmmm.

In conclusion, I will let the authors sum up their findings:

TAISCH allows for obtaining patient satisfaction data that are highly attributable to specific hospitalist providers. The data collection method also permits high response rates so that input comes from almost all patients. The timeliness of the TAISCH assessments also makes it possible for real-time service recovery, which is impossible with other commonly used metrics assessing patient satisfaction. Our next step will include testing the most effective way to provide feedback to providers and to coach these individuals so as to improve performance.

We must ponder not only how to fit ourselves within the house of medicine writ large (we already perform well here), but also how to engage proactively to shape better policy. The tools we forge from our policy choices should yield more precise measurement, improve care delivery, and, heck, support better-deserved salaries, creating a job we want to keep for life.

The effort above moves us a small step in the right direction. Nice work. I hope stakeholders review the publication and begin to consider how we can apply real-time bedside surveys, tweaked by specialty, to improve data reporting and compensation at the highest levels.

PS: I want to suggest a new title for the paper: "Out with the old, in with the new, and don't let the door hit you on the way out" (insert snicker here).

[…] posted on The Hospitalist Leader […]

[…] http://blogs.hospitalmedicine.org/Blog/hospitals-kowtow-to-press-ganey-and-hcahps-should-we/ […]

Thanks for this interesting post. The point about patients’ confusion over, and difficulty identifying, doctors—even with pictures—was quite compelling.

I have some reactions to the TAISCH validation as summarized here and at http://onlinelibrary.wiley.com/doi/10.1002/jhm.2220/abstract.

A Cronbach's alpha of 0.88 indicates not that the 15 survey items are measuring the same general construct (and thus form a valid single scale), but only that they are highly correlated. This is not just semantics. It relates to the old correlation/causation problem (e.g., http://xkcd.com/552/). Consider the spurious reasons why strong correlations can crop up on surveys.

Patients who go through the 15 items mechanically will tend to give similar answers across the board. This is a well-known risk with surveys, one that in its extreme is known in the industry as “straightlining.” (“Halo effect” may also apply.) Similarly, a fair percentage of survey-takers exhibit the sort of bias by which they give overly consistent answers so as not to appear contradictory. Item correlations can even be inflated merely because two questions appear close together on a list. In survey research I’ve found these tendencies to be disconcertingly strong and liable to appear among respondents of widely different backgrounds and ages.

At least as important, one has to be skeptical when two topics that on their face seem so distinct (“compassion, empathy, and concern” vs. “skill in diagnosing and treating”) are treated as if virtually interchangeable. Factor analysis is one of the most subjective of all statistical tools, requiring at least as much art as science in its application. The choices made here were clearly not the only reasonable choices.
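To make the straightlining concern concrete, here is a minimal Python sketch of the standard Cronbach's alpha formula, alpha = k/(k-1) * (1 - sum of item variances / variance of total scores), run on small response sets that are entirely hypothetical (invented for illustration, not TAISCH data). Items 1 and 2 are built to track one construct and items 3 and 4 an unrelated one; adding a few respondents who give the same score to every item pushes alpha up even though the two constructs stay unrelated.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    k = len(responses[0])
    # Variance of each item across respondents (population variance; any
    # consistent choice works, since only the ratio matters).
    item_vars = [pvariance([row[i] for row in responses]) for i in range(k)]
    # Variance of each respondent's total score across all k items.
    total_var = pvariance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical 1-5 ratings: items 1-2 track one construct, items 3-4 another,
# and the two constructs are uncorrelated across these four respondents.
two_constructs = [
    [5, 5, 1, 1],
    [4, 4, 5, 5],
    [2, 2, 5, 5],
    [1, 1, 1, 1],
]

# Hypothetical "straightliners" who give one score to every item.
straightliners = [
    [5, 5, 5, 5],
    [4, 4, 4, 4],
    [3, 3, 3, 3],
    [2, 2, 2, 2],
]

alpha_two = cronbach_alpha(two_constructs)
alpha_mixed = cronbach_alpha(two_constructs + straightliners)
alpha_straight = cronbach_alpha(straightliners)

print(f"two unrelated constructs:      {alpha_two:.2f}")       # 0.67
print(f"same data plus straightliners: {alpha_mixed:.2f}")     # 0.82
print(f"straightliners alone:          {alpha_straight:.2f}")  # 1.00
```

Alpha summarizes how much the items move together, not why they do; in this toy case uniform answering alone lifts it from about 0.67 to about 0.82, and a pure straightliner sample reaches 1.0.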

(I should probably also point out that, despite what the abstract reports, a combination of an r of 0.91 and a p of 0.51 is in my book a mathematical impossibility, and betas of 11 and 12 likely indicate errors as well.)
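One way to see why that pairing cannot hold, assuming the reported r and p describe the same Pearson correlation tested two-sided against zero (an assumption, since the abstract's exact test is not quoted here), is the usual t statistic for n paired observations:

$$
t = \frac{r\sqrt{n-2}}{\sqrt{1-r^{2}}}, \qquad \text{df} = n - 2
$$

$$
r = 0.91:\quad t = \frac{0.91\sqrt{n-2}}{\sqrt{1-0.8281}} \approx 2.19\sqrt{n-2}
$$

Even at the smallest usable sample (n = 3, one degree of freedom, where the two-sided p equals 1 - (2/π)·arctan|t|), t ≈ 2.19 gives p ≈ 0.27, and p only shrinks as n grows. Under that assumption, no sample size pairs r = 0.91 with p = 0.51.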

My guess is that we will be hearing much more about this topic: the validity and reliability of the TAISCH are far from settled. My critiques aside, this topic holds great importance for providers and consumers, and all such work toward the best evidence-based measures is of value.

Roland B. Stark

Senior Research Analyst

ReInforced Care, Inc.

Thanks Roland.

I passed your comment along to the authors for a response to the issues you raised.

Brad

Thank you for your interest in our work. First, we would like to thank you for pointing out the oddities in the correlation coefficient numbers and the betas. There was indeed a mistake in the final copy that resulted from typos. We are working with the journal to have them corrected. That said, the substantive results of our study do not change; only a few of the numbers do. A revised epub should appear online shortly.

As for the reliability and validity findings from our study, we acknowledge that there are additional techniques that may be employed to address some of the concerns that you have raised (e.g., Item Response Theory methodology). Some such methods were considered but were felt to be beyond the scope of the current study, and they may instead be incorporated in future work. We feel our study provides an important contribution to the field, and we hope that it is just the beginning. We agree that the "validity and reliability of the TAISCH are far from settled," as is always the case with the first publication related to a new scale or measurement metric. We are actively engaged in additional work in this area, and we are well aware that others are too.